Studies of artificial general intelligence – AGI

Through this project, Anandi Hattiangadi aims to investigate and answer several questions about artificial general intelligence (AGI), among them: What is AGI? What does it mean for AGI to emerge in a system? What can we learn from the risks associated with emerging AGI?

“Cogito machina” – Latin for “I, a machine, think” – was suggested by ChatGPT as the title for this project, prompting Anandi Hattiangadi to reflect further. Can ChatGPT think? Did it understand the question?

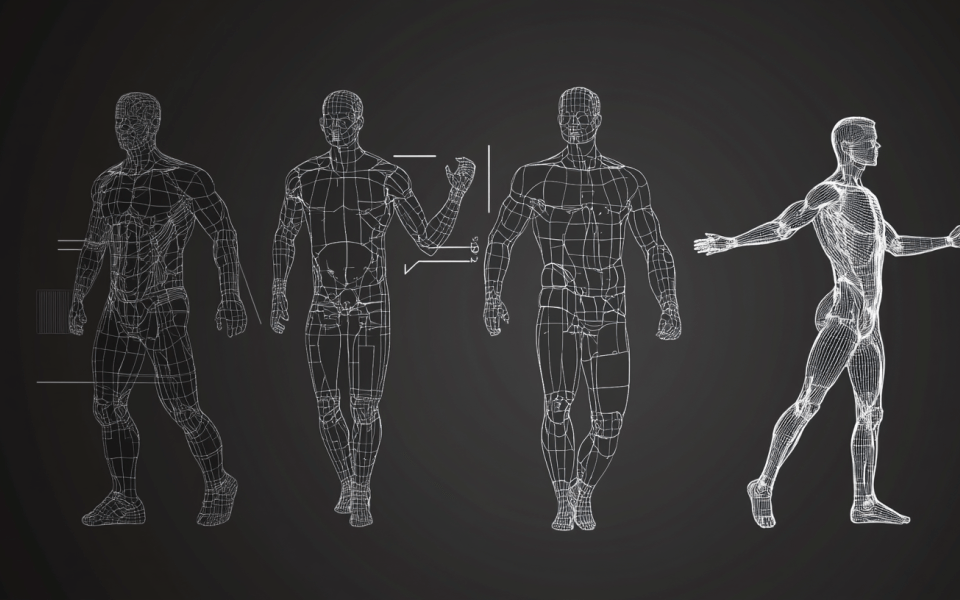

Until recently, thinking machines were regarded as science fiction. In recent years, however, technological strides in the development of large language models (LLMs) such as ChatGPT have revolutionized what these systems can do. The latest generation of LLMs can write poetry, solve advanced mathematical problems, and teach you how to bake, leading some to claim that these models now show clear signs of AGI and genuine language understanding. Others argue that the systems are merely "stochastic parrots" that mimic intelligent behavior without possessing true intelligence.

Yet these systems are still artificial neural networks, trained on huge amounts of data to predict, with growing accuracy, the most likely continuation of a text. Although ChatGPT can teach you how to bake a cake, it has never tasted one, let alone baked one.

Researchers consider it essential to determine whether AI systems will demonstrate AGI in the near future. If AI systems approach human-level intelligence, they could soon be able to set their own goals and seek power over humans.

In light of rapid technological advances, some researchers worry that superintelligent artificial beings will emerge and bring about the extinction of humanity. Is this fear unfounded and alarmist? Or would we be naive not to take it seriously? How much of our limited time and energy should we spend reducing the risks to human life posed by emerging AGI? On the other hand, if AI systems do demonstrate AGI in the very near future, we will have to deal with systems that have beliefs, desires, hopes, and fears. In moral terms, such systems would be very similar to humans: like us, they would have legitimate interests, rights, and obligations.

The problem is that there is no consensus on what AGI is, what is required for a system to demonstrate it, or how we can know whether AGI is emerging in a system. Many of the benchmarks currently used to assess AGI are superficial: they test only performance and therefore cannot distinguish imitation from genuine AGI.

Anandi Hattiangadi aims to fill this knowledge gap by answering the following questions:

- What is AGI?

- What is required for a system to demonstrate AGI?

- What does it mean for AGI to emerge in a system?

- How can we know that AGI is emerging in an AI system?

- Is AGI emerging in current AI systems or those of the near future?

- What can we learn from the risks associated with emerging AGI?

Project:

“Cogito machina: Investigating the emergence of artificial general intelligence”

Principal Investigator:

Professor Anandi Hattiangadi

Institution:

Institute for Futures Studies

Grant:

SEK 5 million